01. THE_CHALLENGE

Healthcare data is notoriously fragmented, siloed, and unstructured. Delivering "precision medicine" requires not just advanced AI models but also a robust infrastructure capable of ingesting, normalizing, and analyzing diverse data streams, from EHRs to genomic sequencing, in real time.

The mission was to build a global interoperability layer that could:

- Harmonize data from disparate systems using HL7 standards, including FHIR.

- Deploy predictive models to identify high-risk patients early.

- Ensure strict compliance with GDPR, HIPAA, and local data sovereignty laws across the US and Europe.

02. THE_SOLUTION

I led the development of a modular AI platform that integrates directly with clinical workflows. By combining a scalable data lakehouse architecture with edge-deployed inference models, we achieved real-time insights without compromising data security.

Interoperability Engine

A custom ingestion pipeline that normalizes data from over 50 different EHR systems into a unified FHIR-compliant format, enabling true cross-system analytics.
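As an illustration of what one normalization step can look like, the sketch below maps a flat vendor record onto a minimal FHIR R4 Patient resource. The input field names (`mrn`, `first_name`, `last_name`, `sex`, `dob`), the date format, the gender code table, and the `to_fhir_patient` helper are all hypothetical; the actual pipeline's source schemas and mapping rules are not described here.

```python
from datetime import datetime

# Hypothetical vendor gender codes mapped to FHIR administrative-gender values.
GENDER_MAP = {"m": "male", "f": "female", "o": "other"}


def to_fhir_patient(raw: dict, source_system: str) -> dict:
    """Map a hypothetical flat vendor record onto a minimal FHIR R4 Patient."""
    # Assumes the vendor sends dates as MM/DD/YYYY; FHIR expects YYYY-MM-DD.
    birth_date = datetime.strptime(raw["dob"], "%m/%d/%Y").date().isoformat()
    sex_code = (raw.get("sex") or "").strip().lower()
    return {
        "resourceType": "Patient",
        # The identifier keeps the source system so records stay traceable.
        "identifier": [{"system": source_system, "value": str(raw["mrn"])}],
        "name": [{"family": raw["last_name"], "given": [raw["first_name"]]}],
        "gender": GENDER_MAP.get(sex_code, "unknown"),
        "birthDate": birth_date,
    }


if __name__ == "__main__":
    record = {"mrn": 1042, "first_name": "Jane", "last_name": "Doe",
              "sex": "F", "dob": "03/07/1980"}
    print(to_fhir_patient(record, "urn:example:ehr-a"))
```

In practice each source system would need its own adapter feeding a shared target shape like this one, which is what makes cross-system queries possible.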

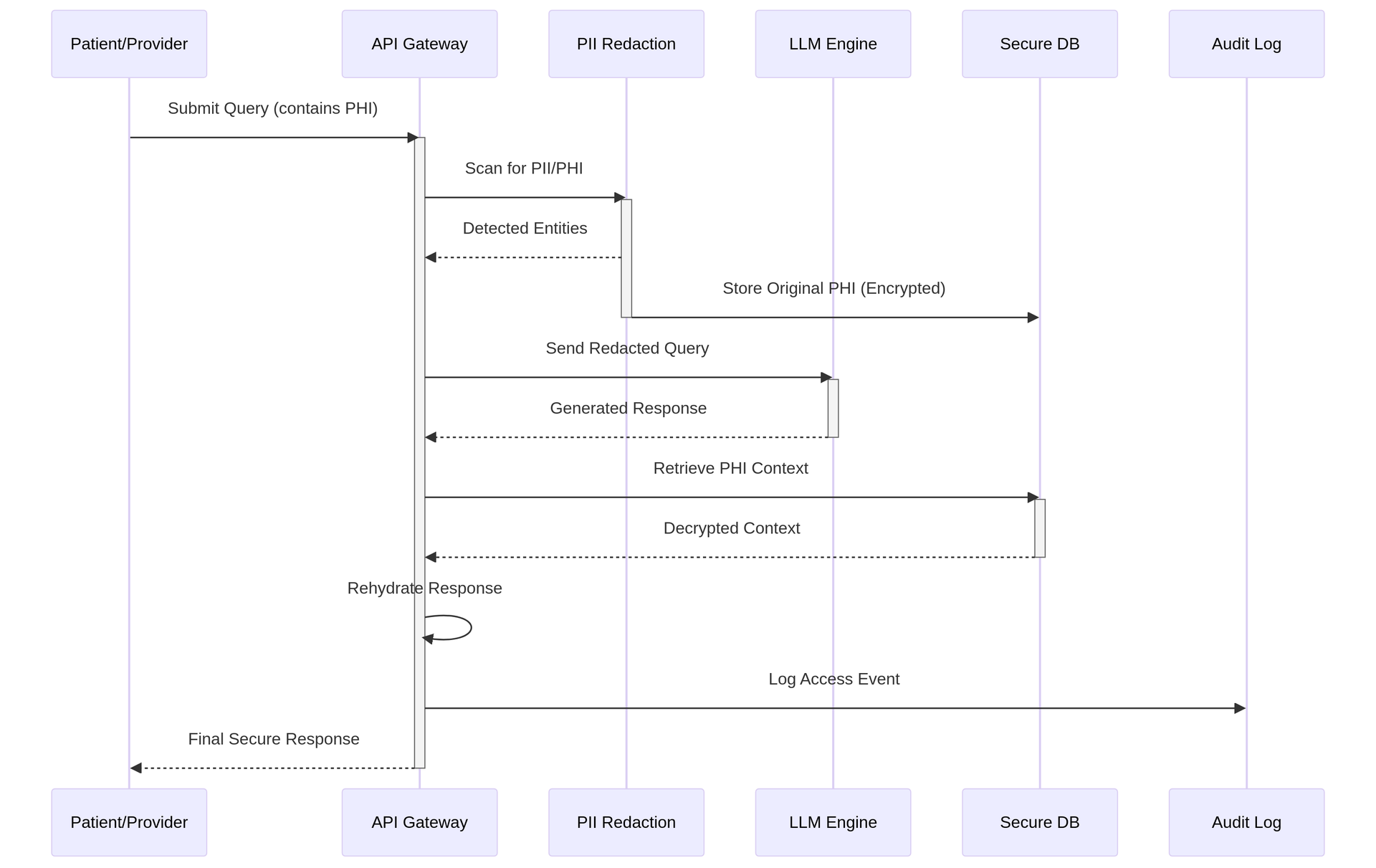

SECURE_DATA_FLOW_SEQUENCE

Figure 1: HIPAA-Compliant PII Redaction & Rehydration Flow
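A redaction and rehydration flow of this kind can be sketched as follows: identifiers are swapped for opaque tokens before text leaves the trusted boundary, and the tokens are swapped back afterwards using a mapping that never leaves it. This is a minimal sketch under assumptions, not the production design. The two regex patterns, the token format, and the in-memory `vault` dictionary are hypothetical stand-ins; real PHI detection needs far broader coverage than two patterns.

```python
import re
import uuid

# Hypothetical patterns for illustration only; real PHI detection is broader.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Replace matched identifiers with opaque tokens; return text and vault."""
    vault: dict[str, str] = {}

    def make_sub(label: str):
        def _sub(match: re.Match) -> str:
            token = f"[{label}_{uuid.uuid4().hex[:8]}]"
            vault[token] = match.group(0)  # original value stays local
            return token
        return _sub

    for label, pattern in PATTERNS.items():
        text = pattern.sub(make_sub(label), text)
    return text, vault


def rehydrate(text: str, vault: dict[str, str]) -> str:
    """Restore the original values for any tokens still present in the text."""
    for token, original in vault.items():
        text = text.replace(token, original)
    return text


if __name__ == "__main__":
    note = "Patient SSN 123-45-6789, contact jane.doe@example.org."
    safe, vault = redact(note)
    print(safe)                    # identifiers replaced by tokens
    print(rehydrate(safe, vault))  # original note restored
```

Only the redacted text would be sent to a downstream model; the vault stays inside the compliant environment and is applied to the model's response on the way back.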

Predictive Analytics

Deployed LLM-based risk-scoring models that analyze patient history to predict readmission risk with 85% accuracy, allowing providers to intervene proactively.
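For context on how a figure like this is typically measured, the sketch below scores thresholded risk predictions against known outcomes. The `evaluate` helper, the 0.5 threshold, and the sample data are hypothetical and only show the arithmetic; they are not the project's evaluation protocol. Sensitivity and specificity are reported next to accuracy because readmissions are usually the minority class.

```python
def evaluate(scores, labels, threshold=0.5):
    """Compare thresholded risk scores with observed outcomes (1 = readmitted)."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted = score >= threshold
        if predicted and label == 1:
            tp += 1
        elif predicted and label == 0:
            fp += 1
        elif not predicted and label == 0:
            tn += 1
        else:
            fn += 1
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else None,
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        "specificity": tn / (tn + fp) if (tn + fp) else None,
    }


if __name__ == "__main__":
    # Made-up scores and outcomes, for illustration only.
    print(evaluate([0.9, 0.2, 0.7, 0.4], [1, 0, 0, 1]))
```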

Secure Data Lake

Architected a federated data storage solution that keeps patient data in its region of origin while allowing aggregated, privacy-preserving model training.
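One common way to train across regions without moving patient data is federated averaging: each region trains locally and shares only model weights, which a coordinator combines in proportion to each region's sample count. The sketch below assumes that technique for illustration; the source does not name the aggregation method used, and `federated_average` and the example numbers are hypothetical.

```python
def federated_average(updates):
    """Combine regional weight vectors, weighted by local sample counts.

    `updates` is a list of (weights, n_samples) pairs. Only these weight
    vectors cross regional boundaries; raw records stay where they are.
    """
    if not updates:
        raise ValueError("at least one regional update is required")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all weight vectors must have the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


if __name__ == "__main__":
    us_update = ([1.0, 2.0], 100)   # (weights, local sample count)
    eu_update = ([3.0, 4.0], 300)
    print(federated_average([us_update, eu_update]))
```

Weight sharing alone does not guarantee privacy; a real deployment would typically layer techniques such as secure aggregation or differential privacy on top.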

Automated Workflows

Replaced manual chart reviews with AI-driven extraction, reducing administrative burden by 40% and freeing up clinicians to focus on patient care.